Week in OSINT #2018-21

After last week's Week in OSINT I received a lot of positive feedback, so I did my best to round up some more interesting sites, tools and news for you.

Let's start with a quick overview of the topics:

- GDPR and WHOIS

- Tweetdeck, Feedreader and Chirp

- Scumblr

- OSINT on IP addresses

- African OSINT sources

- ImgOps reverse image tool

- Reaper

- Blog by Justin Seitz

- Tutorials by Oliver Klein

- Bellingcat report about MH17

News: GDPR and WHOIS

On May 25 the GDPR came into effect, and up until that day a lot of people did not know what its exact impact would be on certain areas of OSINT research. One thing many people were watching closely was what would happen to WHOIS data. I do realize that a target is sometimes hidden behind a proxy service, but there were, and still are, cases where WHOIS data can be very valuable.

After years of stalling, ICANN finally announced, a few days before the deadline, that personal information would be stripped from the registrant, administrative and technical contacts in the publicly available WHOIS data for generic TLDs. During some testing, however, I found that a lot of websites strip even the remaining information and return nothing at all, which renders them completely useless. Better-known sites like ViewDNS and RobTex still work as expected and give the same output as a query via the command line.
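To see for yourself what a registrar still returns, it helps to know that a command-line WHOIS lookup is just a plain-text exchange over TCP port 43 (RFC 3912): send the query followed by CRLF and read until the server closes the connection. The following is a minimal Python sketch, not a full client. The server to ask depends on the TLD and registrar (for instance, asking `whois.iana.org` about a TLD returns a `refer:` line naming its registry server), and the `"REDACTED"` marker is an assumption, since the exact wording differs per registrar.

```python
import socket


def whois_query(query: str, server: str, port: int = 43, timeout: float = 10.0) -> str:
    """Send a raw WHOIS query (RFC 3912) and return the server's full text reply."""
    with socket.create_connection((server, port), timeout=timeout) as sock:
        sock.sendall(query.encode("utf-8") + b"\r\n")
        chunks = []
        while True:
            data = sock.recv(4096)
            if not data:  # the server closes the connection when it is done
                break
            chunks.append(data)
    return b"".join(chunks).decode("utf-8", errors="replace")


def contact_fields(reply: str, prefixes=("Registrant", "Admin", "Tech")) -> dict:
    """Collect 'Key: value' lines belonging to the registrant, admin and tech contacts."""
    fields = {}
    for line in reply.splitlines():
        key, sep, value = line.partition(":")
        key = key.strip()
        if sep and key.startswith(prefixes):
            fields[key] = value.strip()
    return fields


def redacted_keys(fields: dict, marker: str = "REDACTED") -> list:
    """Return contact keys whose value is empty or contains the (assumed) redaction marker."""
    return sorted(k for k, v in fields.items() if not v or marker.lower() in v.lower())
```

Usage would look like `redacted_keys(contact_fields(whois_query("example.com", server)))`, where `server` is whichever WHOIS server is responsible for the domain.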

While reading up on the subject, I stumbled upon the website of RiskIQ. They took an approach that leans slightly in favour of threat hunters while still protecting the information of innocent people. I wonder whether it is compliant with the GDPR, since a hashed email address is still a unique fingerprint that can identify an email address or person, but it at least makes it possible to follow up on a possible lead:

'For the platform and across our products, what this means is that depending on your needs and level of access, some personal data contained in WHOIS records from RiskIQ PassiveTotal will not necessarily be available to inspect. In some cases, you will eventually have the ability to pivot from a hashed phone number or email address, but you may not need to see the actual phone number or email address in every investigation, on all occasions. When the unmasked data is needed, we intend for this information to be available, subject to certain safeguards and controls.'
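RiskIQ does not describe its hashing scheme in the quote above, so the following is only a sketch of the general idea, assuming a normalized (trimmed, lower-cased) email address hashed with SHA-256. Records registered with the same address get the same hash, so you can pivot between them without ever seeing the address itself. The domains and addresses are made up.

```python
import hashlib
from collections import defaultdict


def email_fingerprint(email: str) -> str:
    """Hash a normalized email address; SHA-256 and this normalization are assumptions."""
    normalized = email.strip().lower()
    return hashlib.sha256(normalized.encode("utf-8")).hexdigest()


def group_by_fingerprint(records):
    """Group (domain, hashed_email) pairs so domains sharing a registrant hash can be pivoted on."""
    groups = defaultdict(list)
    for domain, hashed_email in records:
        groups[hashed_email].append(domain)
    return dict(groups)


# Hypothetical WHOIS records in which only the hash of the registrant email is visible.
records = [
    ("example-shop.test", email_fingerprint("John.Doe@example.org")),
    ("example-blog.test", email_fingerprint(" john.doe@example.org ")),
    ("unrelated.test", email_fingerprint("someone.else@example.net")),
]
groups = group_by_fingerprint(records)

# A lead found elsewhere can be checked against the hashes without unmasking anything.
lead = email_fingerprint("john.doe@example.org")
print(groups.get(lead))
```

This also shows why GDPR compliance is debatable: anyone who already holds a candidate address can compute its hash and confirm the match.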

We will all have to see how this plays out in the end, but traces of email addresses can still be found all over the internet, not just in WHOIS data, so there are still plenty of options to keep digging. And if you really need WHOIS data at an important step in your investigation, don't forget that people who work at a registrar are still able to pull up the needed information for you.

Tutorial: Tweetdeck and Feedreader

Right after last week's "Week in OSINT" I stumbled upon Jake Creps' blog, which holds a few articles, mostly aimed at beginners, about 'dashboarding' your OSINT information. Not all articles were finished at the time of writing, but I found at least two very interesting ones that I wanted to point out this week.

The first article is about how to set up Tweetdeck for gathering information. Jake goes over all the basic settings and the columns you should or could add to create an overview that helps you make the most of this tool. Even though there isn't a lot to explain about Tweetdeck itself, we all know it can be a very powerful tool when doing research. I've been using it for years and I love its simplicity and the clear overview it gives me. But if you are new to this, just give this article a read and try it out for yourself!

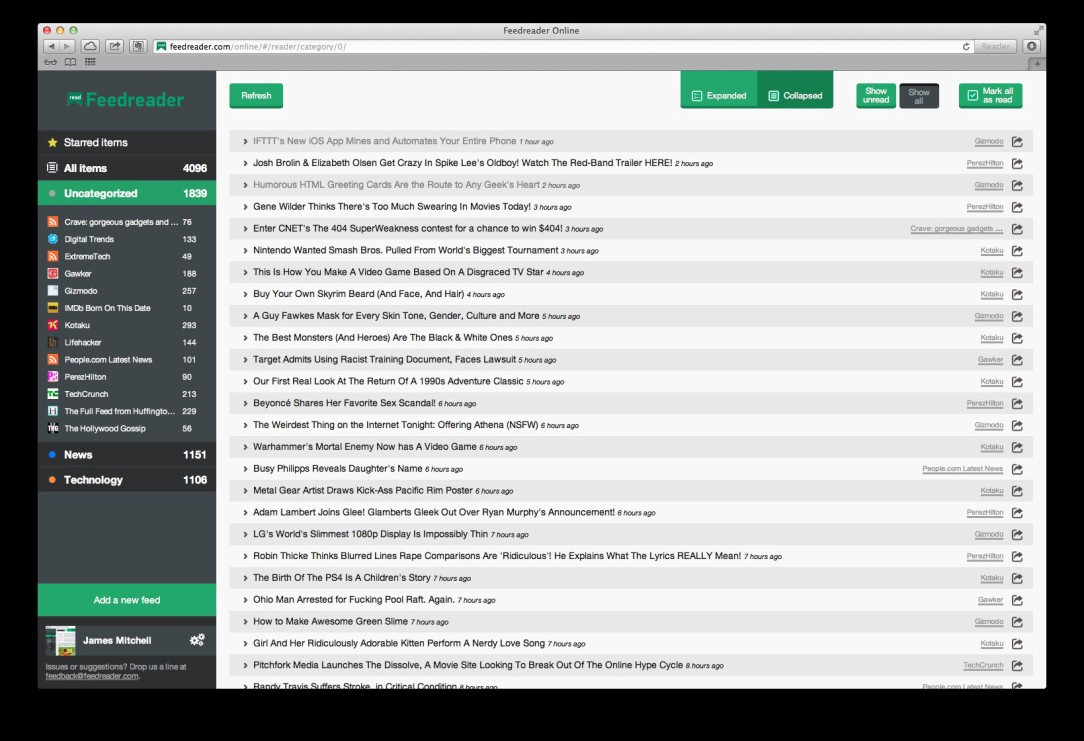

Another nice article deals with Feedreader and how you can set it up to gather information via RSS feeds. Jake explains how to sign up, create a dashboard and import feeds, and gives some tips on what to include. I have my own feed aggregator hosted somewhere, purely for security-related news, but this is a whole new approach to me and I may actually have to give it a try.

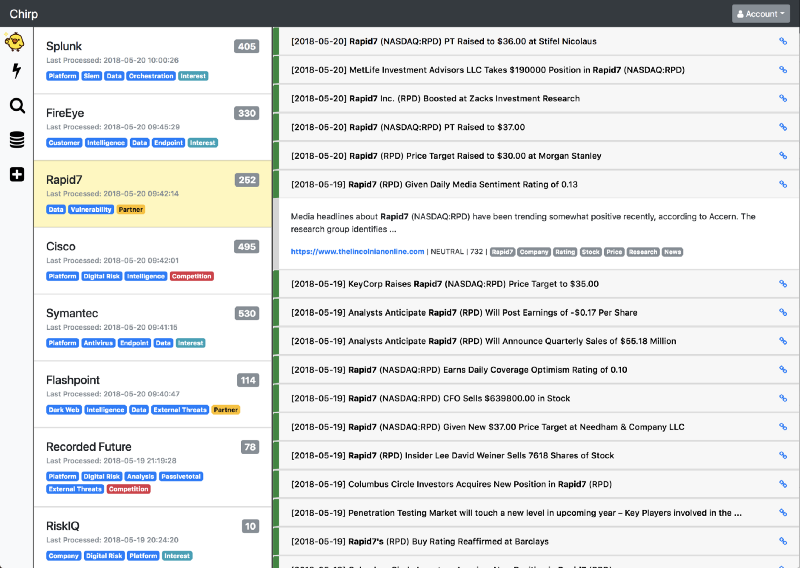
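Feedreader is a hosted service, so there is nothing to script there, but to illustrate what any feed reader does under the hood, here is a minimal sketch that parses an RSS 2.0 document with Python's standard library and keeps only the items whose title mentions a keyword. The sample feed and the keyword filter are made up for illustration; real feeds may also use Atom, which this sketch does not handle.

```python
import xml.etree.ElementTree as ET


def parse_rss(xml_text: str):
    """Return (title, link, pubDate) tuples for every <item> in an RSS 2.0 feed."""
    root = ET.fromstring(xml_text)
    items = []
    for item in root.iter("item"):
        items.append((
            item.findtext("title", default="").strip(),
            item.findtext("link", default="").strip(),
            item.findtext("pubDate", default="").strip(),
        ))
    return items


def filter_items(items, keywords):
    """Keep items whose title contains any of the keywords (case-insensitive)."""
    wanted = [k.lower() for k in keywords]
    return [it for it in items if any(k in it[0].lower() for k in wanted)]


SAMPLE_FEED = """<?xml version="1.0"?>
<rss version="2.0"><channel><title>Example feed</title>
  <item><title>New OSINT tool released</title><link>https://example.com/1</link>
        <pubDate>Sun, 27 May 2018 10:00:00 GMT</pubDate></item>
  <item><title>Weather update</title><link>https://example.com/2</link></item>
</channel></rss>"""

for title, link, published in filter_items(parse_rss(SAMPLE_FEED), ["osint"]):
    print(title, link, published)
```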

And if you would rather host something yourself that uses Google Alerts to provide you with the needed intelligence, there is another project that might just be what you've been looking for. Stepping away from Jake's blog, Chirp is a project that uses a custom API to sift through Google Alerts, after which the information is stored in a local MongoDB instance with the help of containers and Celery. The project is still in development, but looks very promising! For the techies who need more info, just have a look at the project on GitHub.
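I have not gone through Chirp's code, so this is not how Chirp does it, just a minimal sketch of one step any Google Alerts pipeline needs. It assumes that the alert feed wraps each result in a `google.com/url?...&url=<target>` redirect, which is the format I have seen but which may change. The sketch recovers the real target with the standard library and uses it as a key to drop duplicates before anything is stored.

```python
from urllib.parse import urlparse, parse_qs


def unwrap_google_redirect(link: str) -> str:
    """Return the target of a google.com/url redirect, or the link unchanged.

    Relying on the 'url' query parameter is an assumption about the alert feed format.
    """
    parsed = urlparse(link)
    if parsed.netloc in ("google.com", "www.google.com") and parsed.path == "/url":
        target = parse_qs(parsed.query).get("url")
        if target:
            return target[0]  # parse_qs has already percent-decoded the value
    return link


def deduplicate(links):
    """Unwrap every link and keep the first occurrence of each target, preserving order."""
    seen = set()
    unique = []
    for link in links:
        target = unwrap_google_redirect(link)
        if target not in seen:
            seen.add(target)
            unique.append(target)
    return unique
```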

Tweetdeck: https://jakecreps.com/2018/05/19/tweetdeck/

Feedreader: https://jakecreps.com/2018/05/20/feedreader/

Chirp: https://github.com/9b/chirp

Tool: Scumblr

While writing the previous section, I went over a few sites that deal with gathering and handling information on a larger scale, and that's when I stumbled upon an open source tool from Netflix called Scumblr. This web application lets you periodically search multiple sources for data and was initially aimed at application security and vulnerability management.

But Netflix did a good job designing a tool that can be used in other areas as well, and I recognized its potential for automating OSINT, especially when new information is constantly coming in from online sources. I wasn't the first person to have this idea, though: after some browsing I found Gary Hoffman's write-up on using the tool for exactly this task. If I have made you curious, just read "Automated OSINT with Scumblr".

After reading that article and going over their wiki, I can only say that in my opinion Scumblr is a tool worth taking a serious look at, especially if you need a way to gather a constant stream of online information, store and classify it, and afterwards run all sorts of queries on it. And as Gary Hoffman also pointed out: you won't get hit by Google CAPTCHAs for crawling activity!
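Scumblr itself is a Ruby on Rails application with its own search providers and workflows, so the following is not its API. It is only a minimal Python sketch of the same store, classify and query loop. It assumes that results arrive as (source, url, text) tuples and that simple keyword rules are enough to tag them. The table layout, rule names and sample data are hypothetical.

```python
import sqlite3

# Hypothetical classification rules: tag -> keywords that trigger it.
RULES = {
    "leak": ["password", "dump"],
    "brand": ["acme"],
}


def classify(text: str) -> str:
    """Return a comma-separated, sorted list of tags whose keywords occur in the text."""
    lowered = text.lower()
    tags = [tag for tag, words in RULES.items() if any(w in lowered for w in words)]
    return ",".join(sorted(tags))


def store(conn, results):
    """Insert (source, url, text) results once per URL, tagging each on the way in."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS results ("
        "url TEXT PRIMARY KEY, source TEXT, text TEXT, tags TEXT)"
    )
    for source, url, text in results:
        conn.execute(
            "INSERT OR IGNORE INTO results (url, source, text, tags) VALUES (?, ?, ?, ?)",
            (url, source, text, classify(text)),
        )
    conn.commit()


def query_by_tag(conn, tag: str):
    """Return the URLs whose tag list contains the given tag."""
    rows = conn.execute("SELECT url, tags FROM results ORDER BY url").fetchall()
    return [url for url, tags in rows if tag in tags.split(",")]


conn = sqlite3.connect(":memory:")
store(conn, [
    ("source-a", "https://example.com/p/1", "ACME password dump"),
    ("source-b", "https://example.com/t/2", "Nice weather today"),
    ("source-a", "https://example.com/p/1", "ACME password dump"),  # duplicate URL is ignored
])
print(query_by_tag(conn, "leak"))
```

Running the sync on a schedule (cron, or a task queue) is what turns this into a "constant stream"; the primary key on `url` keeps repeated runs from storing the same hit twice.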

Link: https://portunreachable.com/automated-osint-with-scumblr-a4d81a048e54

Scumblr on GitHub: https://github.com/Netflix/Scumblr

Blog: OSINT on IP addresses

This week I opened up Micah Hoffman's blog and read an article by David Mashburn about querying ARIN for information on IP addresses. One of the issues David mentioned was the occasional reply that the information was held by a different regional internet registry (RIR), for instance AFRINIC.

He found that RIPE offers a way to indirectly query the registry in question with a single command. It is a nice write-up about IP addresses and how to extract information via the command line, so have a look at his blog; it is worth a read. I had never heard of jq, a tool for extracting data from JSON, so I might have a look at that.
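When ARIN answers for address space that another RIR administers, its WHOIS reply points elsewhere. Assuming that pointer appears as a `ReferralServer: whois://...` line (as in ARIN's port-43 text output, but do check this against a real reply), a small Python sketch can pick up the referral so you know which registry to ask next. The sample reply below is shortened and made up for illustration, not actual ARIN output.

```python
from typing import Optional
from urllib.parse import urlparse


def referral_server(reply: str) -> Optional[str]:
    """Return the host named in a 'ReferralServer:' line of a WHOIS reply, if any.

    The field name is an assumption based on ARIN's text output.
    """
    for line in reply.splitlines():
        key, sep, value = line.partition(":")
        if sep and key.strip().lower() == "referralserver":
            target = value.strip()
            # Values look like 'whois://whois.afrinic.net' or 'rwhois://host:4321'.
            return urlparse(target).hostname or target
    return None


SAMPLE_REPLY = """NetRange:       197.0.0.0 - 197.255.255.255
Comment:        Illustrative sample, not real output
ReferralServer:  whois://whois.afrinic.net
"""

print(referral_server(SAMPLE_REPLY))
```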

But if you need such information fast and don't want to query several registries in the process, directly or indirectly, there are alternatives like https://ipinfo.io. If you only need the most basic information and make no more than 1000 API calls per day, this is all you need. I know this is not the same as querying a registry itself, since there is no guarantee that the information is trustworthy, but when in doubt a quick double check is all you need:

```
sector035@host:~$ curl https://ipinfo.io/197.220.169.143
{
  "ip": "197.220.169.143",
  "city": "Accra",
  "region": "Greater Accra Region",
  "country": "GH",
  "loc": "5.5500,-0.2167",
  "org": "AS37341 GLO MOBILE GHANA LTD"
}
```

As stated, this only returns the most basic information, but it does not require an API key. And even though it is limited to the previously mentioned 1000 requests per day, that should be enough for most investigations. If you want more information, there is another wonderful API at https://api.ipdata.co that returns an abundance of information, though not all of it useful, and gives you 1500 free API calls per day:

```
sector035@host:~$ curl https://api.ipdata.co/197.220.169.143
{
  "ip": "197.220.169.143",
  "city": "Accra",
  "region": "Greater Accra Region",
  "region_code": "AA",
  "country_name": "Ghana",
  "country_code": "GH",
  "continent_name": "Africa",
  "continent_code": "AF",
  "latitude": 5.55,
  "longitude": -0.2167,
  "asn": "AS37341",
  "organisation": "GLOMOBILE",
  "postal": "",
  "calling_code": "233",
  "flag": "https://ipdata.co/flags/gh.png",
  "emoji_flag": "\ud83c\uddec\ud83c\udded",
  "emoji_unicode": "U+1F1EC U+1F1ED",
  "is_eu": false,
  "languages": [
    {
      "name": "English",
      "native": "English"
    }
  ],
  "currency": {
    "name": "Ghanaian Cedi",
    "code": "GHS",
    "symbol": "GH\u20b5",
    "native": "GH\u20b5",
    "plural": "Ghanaian cedis"
  },
  "time_zone": {
    "name": "Africa/Accra",
    "abbr": "GMT",
    "offset": "+0000",
    "is_dst": false,
    "current_time": "2018-05-27T12:47:39.782926+00:00"
  },
  "threat": {
    "is_tor": false,
    "is_proxy": false,
    "is_anonymous": false,
    "is_known_attacker": false,
    "is_known_abuser": true,
    "is_threat": true,
    "is_bogon": false
  }
}
```

Ipdata is aimed at threat intelligence, which explains the extra information, such as whether an address is a known attacker, a Tor node or a proxy. It is a very nice alternative for OSINT research on IP addresses, so have a look at that one too.
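The "double check" between sources can be made explicit without reaching for jq. Below is a minimal Python sketch, assuming you have saved both replies; only the relevant fields of the two outputs above are pasted in, so it runs offline. It compares the fields the two services share and lists the threat flags ipdata sets to true. The mapping between ipinfo and ipdata field names is my own choice, and splitting the AS number off ipinfo's `org` field assumes it always comes first.

```python
import json

IPINFO = json.loads(
    '{"ip": "197.220.169.143", "city": "Accra", "country": "GH",'
    ' "loc": "5.5500,-0.2167", "org": "AS37341 GLO MOBILE GHANA LTD"}'
)
IPDATA = json.loads("""{"ip": "197.220.169.143", "city": "Accra", "country_code": "GH",
  "asn": "AS37341",
  "threat": {"is_tor": false, "is_proxy": false, "is_anonymous": false,
             "is_known_attacker": false, "is_known_abuser": true,
             "is_threat": true, "is_bogon": false}}""")

# Which ipinfo field corresponds to which ipdata field (a mapping chosen for this sketch).
FIELD_PAIRS = [("ip", "ip"), ("city", "city"), ("country", "country_code")]


def cross_check(ipinfo: dict, ipdata: dict) -> dict:
    """Return, per shared field, whether both services agree."""
    result = {a: ipinfo.get(a) == ipdata.get(b) for a, b in FIELD_PAIRS}
    # ipinfo puts the AS number at the start of 'org'; compare it with ipdata's 'asn'.
    result["asn"] = ipinfo.get("org", "").split(" ")[0] == ipdata.get("asn")
    return result


def raised_flags(ipdata: dict) -> list:
    """List the threat flags that ipdata reports as true."""
    return sorted(k for k, v in ipdata.get("threat", {}).items() if v is True)


lat, lon = (float(part) for part in IPINFO["loc"].split(","))
print(cross_check(IPINFO, IPDATA))
print(raised_flags(IPDATA), lat, lon)
```

Agreement between two commercial sources is not proof, but a disagreement is a clear signal to go back to the registry data.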

Link: https://webbreacher.com/2018/05/21/mining-for-osint-gold-rir-data-via-api/

Link: https://ipinfo.io/

Link: https://ipdata.co/

Source: African OSINT sources

Two weeks ago Ludo Block started sharing links on Twitter to OSINT sources in Africa. I am going to keep this short and let his tweets do the talking. Do keep an eye on his timeline if you are looking for such information, because it wouldn't surprise me if there is a lot more to come!

Website: ImgOps

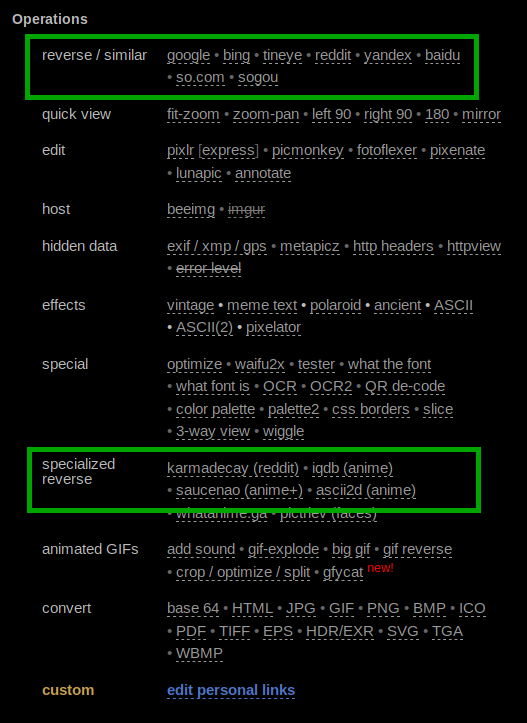

One of the last-minute additions to this "Week in OSINT" is the website ImgOps. I have used it before, since it came up during one of the Quiztime challenges, so it was nice to be reminded of it. To be honest, the tool is effective in its simplicity: you paste an image URL or upload a photo, and you are presented with a menu full of options.

The most interesting options for us are, of course, the reverse/similar searches at the very top and the specialized reverse searches. With one click you can run a reverse image search on sites like Bing, Google and Yandex, and also fire up more specific searches on, for instance, Reddit. Other options that might be useful are OCR and font recognition. The one thing you do have to remember is that this is an online tool, so an uploaded image might end up stored somewhere online, even if only in a cache. Please keep that in mind when trying it out, and make sure not to upload sensitive material.

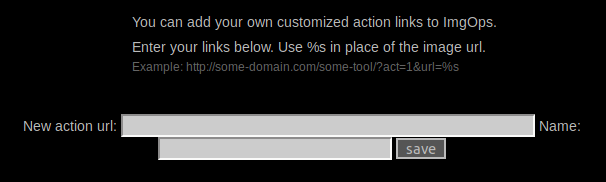

One very nice addition is the bookmarklet. It gives you a full list of all images on the page you are visiting, and with one click any of them is opened on the ImgOps website for further analysis. Besides that, you can also add custom links, which are saved in local cookies (so don't delete those cookies if you want to keep using the links).
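The bookmarklet runs in your browser, but the idea behind it, listing every image on a page, is easy to reproduce offline. Here is a minimal Python sketch using only the standard library: it collects the `src` of every `<img>` tag and resolves relative paths against the page URL. It will not see images injected by JavaScript or set as CSS backgrounds, and the page URL and HTML below are made up.

```python
from html.parser import HTMLParser
from urllib.parse import urljoin


class ImageCollector(HTMLParser):
    """Collect absolute URLs of all <img src=...> tags, in document order, without duplicates."""

    def __init__(self, base_url: str):
        super().__init__()
        self.base_url = base_url
        self.images = []

    def handle_starttag(self, tag, attrs):
        if tag != "img":
            return
        src = dict(attrs).get("src")
        if src:
            absolute = urljoin(self.base_url, src)
            if absolute not in self.images:
                self.images.append(absolute)


def list_images(html: str, base_url: str) -> list:
    collector = ImageCollector(base_url)
    collector.feed(html)
    collector.close()
    return collector.images


page = ('<p><img src="/photos/a.jpg"><img src="b.png" alt="x">'
        '<img src="https://cdn.example.net/c.jpg"><img src="/photos/a.jpg"></p>')
print(list_images(page, "https://example.com/blog/post.html"))
```

Each resulting URL can then be pasted into ImgOps by hand.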

Link: http://imgops.com/

Tool: Reaper

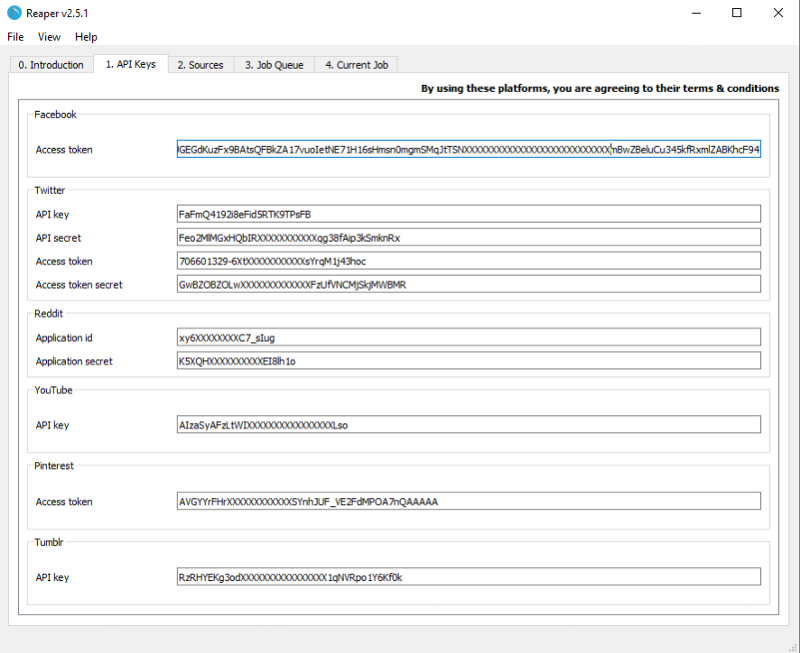

Last Friday, Dutch OsintGuy pointed me to a tool I didn't know about yet, called Reaper.

Reaper can scrape several social media platforms using API keys. You just provide the needed tokens and some search criteria, and the tool does the rest. For now it can gather information from Facebook, Twitter, Reddit, YouTube, Pinterest and Tumblr, with more to come.

One thing I appreciate is that Reaper comes with installers for Windows and Mac, and can also run natively on Linux. Furthermore, there is an extensive set of documentation at https://reaper.social, including some very useful pieces of information, such as how to convert the timestamps from all the different platforms.
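Timestamp conversion is exactly the kind of detail that bites when you combine data from several platforms. I have not checked Reaper's documentation line by line, so the formats below are assumptions based on what these APIs have commonly returned: Twitter's `created_at` string, Reddit's `created_utc` Unix epoch, Facebook's Graph API `created_time`, and YouTube's ISO 8601 `publishedAt`. This minimal sketch normalizes all of them to timezone-aware UTC datetimes so they can be sorted on one timeline.

```python
from datetime import datetime, timezone


def from_twitter(value: str) -> datetime:
    """Twitter created_at, e.g. 'Sun May 27 12:47:39 +0000 2018' (assumed format)."""
    return datetime.strptime(value, "%a %b %d %H:%M:%S %z %Y").astimezone(timezone.utc)


def from_reddit(value: float) -> datetime:
    """Reddit created_utc: seconds since the Unix epoch."""
    return datetime.fromtimestamp(value, tz=timezone.utc)


def from_facebook(value: str) -> datetime:
    """Facebook Graph API created_time, e.g. '2018-05-27T12:47:39+0000' (assumed format)."""
    return datetime.strptime(value, "%Y-%m-%dT%H:%M:%S%z").astimezone(timezone.utc)


def from_youtube(value: str) -> datetime:
    """YouTube publishedAt, e.g. '2018-05-27T12:47:39.000Z'; the 'Z' is rewritten as +0000."""
    if value.endswith("Z"):
        value = value[:-1] + "+0000"
    return datetime.strptime(value, "%Y-%m-%dT%H:%M:%S.%f%z").astimezone(timezone.utc)
```

Keeping everything timezone-aware means mixed sources compare and sort correctly, instead of silently treating a local time as UTC.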

Link: https://github.com/scriptsmith/reaper

Link: https://reaper.social

Blog: Jumping to conclusions by Justin Seitz

I suspect many of you have already read the latest blog post by Justin Seitz. It is about drawing conclusions from findings, and what happens when you make wrong assumptions. We all know it is of the utmost importance that every finding that might eventually be used in a case is validated and can actually stand up in court. This article reminds us how important verification and corroboration are, and that drawing conclusions does not always play out the way one intended.

Link: https://medium.com/@hunchly/jumping-to-osint-conclusions-a97fc5e623f4

Blog: Investigating people by Oliver Klein

Oliver Klein has overhauled his guide to investigating people online, so if your German is good enough, or you trust a translation service, do check out his article. What I like is that he split his investigation into different types of searches.

The first part is about finding people whose name you know but who you suspect use a false name online, or who have a very common name. The second part explains how to find more information on social media via real names, or via nicknames found to be connected to the target. And the last part deals with searching for usernames based on email addresses across several social media platforms.

The things he mentions in his article might all be logical and known to a lot of investigators, but what I really appreciate is that he created lists of steps you can take and sites you can use, almost amounting to a general workflow for regular investigations. I think it is always a good idea to have overviews like this that you can use as a checklist or cheat sheet during an investigation.
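Part of such a checklist is mechanical enough to script. This is a minimal sketch, not taken from Oliver's guide: given a name and an email address, it generates common username variants and the profile URLs you would then check by hand. The variants and URL templates are my own assumptions; platforms change their URL schemes and username rules (not every platform allows dots, for example), and a profile that exists does not prove it belongs to your target.

```python
import re

# Profile URL templates are assumptions; verify them before relying on the results.
PROFILE_TEMPLATES = [
    "https://twitter.com/{}",
    "https://www.instagram.com/{}/",
    "https://github.com/{}",
    "https://www.reddit.com/user/{}",
]


def username_candidates(first: str, last: str, email: str = "") -> list:
    """Return de-duplicated username guesses from a (non-empty) name and an email local part."""
    f, l = first.lower(), last.lower()
    guesses = [f + l, f + "." + l, f + "_" + l, f[0] + l, f + l[0], l + f]
    if "@" in email:
        local = email.split("@", 1)[0].lower()
        guesses.append(local)
        guesses.append(re.sub(r"\d+$", "", local))  # drop trailing digits, e.g. 'jjansen1985'
    seen, result = set(), []
    for guess in guesses:
        if guess and guess not in seen:
            seen.add(guess)
            result.append(guess)
    return result


def profile_urls(candidates) -> list:
    """Combine every candidate with every profile URL template."""
    return [template.format(c) for c in candidates for template in PROFILE_TEMPLATES]


print(username_candidates("Jan", "Jansen", "jjansen1985@example.com"))
```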

Link: http://rechercheseminar.de/leitfaden-investigative-personenrecherche/

News: MH17 report by Bellingcat

The biggest OSINT-related news story of last week is of course the release of a report and the first-ever press conference that Bellingcat held on Friday, May 25. A joint investigation by the Bellingcat team, together with The Insider and McClatchy DC Bureau, concluded that there is a very high probability that a previously unidentified person of interest called "Oreon" is Oleg Ivannikov.

This adds another suspect to the list of people being investigated for shooting down the Malaysian airliner MH17 in July 2014. Bellingcat has been working on the MH17 case from day one, looking into anything related to the conflict in Ukraine, and has already published multiple findings regarding the BUK launcher and its Russian crew. This is another positive step in the long-running investigation into this tragedy.

That was it for this week! If you have any tips regarding new tools, websites or news articles, contact me on Twitter or simply tag me in a retweet!

Have a good week and have a good search!