About Black Box (CL)OSINT Tools

Over the last decade or so, I have had the feeling that 'OSINT' has simply become a buzzword, and loads of companies and startups want to jump on the bandwagon and earn some extra money with it. Along with that, I have also noticed a very dangerous development within the field of open source intelligence: every so often an online platform pops up, claiming to be the best 'OSINT tool'. But what exactly are these so-called 'OSINT tools'?

With the abundance of these 'black box' intelligence products, I see people mistaking them for the practice of open source intelligence. I have to admit that nowadays I often find myself talking about 'investigating using open sources' or 'internet research' instead of using the acronym OSINT, simply to emphasise that I am using open sources to collect the data I need for my investigations, and to leave the word 'intelligence' out of the conversation altogether. And yes, I do share all sorts of tools in Week in OSINT, but I usually don't share those 'black box' platforms, or I might even include a warning about them in my article. In this blog post, I want to try to explain what my issues are with this development and these tools.

What is OSINT?

Wikipedia lists several definitions of OSINT. The U.S. Department of Defense describes it as:

NATO has a slightly different definition, namely:

Just below that, there is another definition by Mark M. Lowenthal, the former Assistant Director of Central Intelligence for Analysis:

So there are several slightly different definitions, but they have one thing in common: the collection of publicly available information to create intelligence.

Definition of Terms

Before I continue, I would like to explain a few terms that are important for this article. Decades ago I learned in school that there is a difference between data and information, so it might be a good time for a recap before I dive into the rest of this article.

Data

Data is a collection of values, in computer science usually a bunch of zeros and ones. It can be described as raw, unorganised and unprocessed information. To use an analogy, you can think of it as the raw ingredients of a recipe.

Information

When we process, organise and interpret data, we give it context and it becomes information. If data is the raw ingredients, information is the dish you have prepared from them.

Intelligence

Intelligence is the actual knowledge or insight derived from analysing, synthesising and interpreting this information. Within OSINT, by combining all the information that was gathered, we are able to uncover new leads. And that is the 'intelligence' produced within the OSINT lifecycle. In our analogy, this is learning how our newly created dish actually tastes.

Most of the time the terms data and information are actually used interchangeably, but to make this article complete, I wanted to mention the difference. What is more important is that any new information we uncover that teaches us something about the subject matter at hand can be 'intelligence', but only after everything that was collected has been analysed and interpreted.

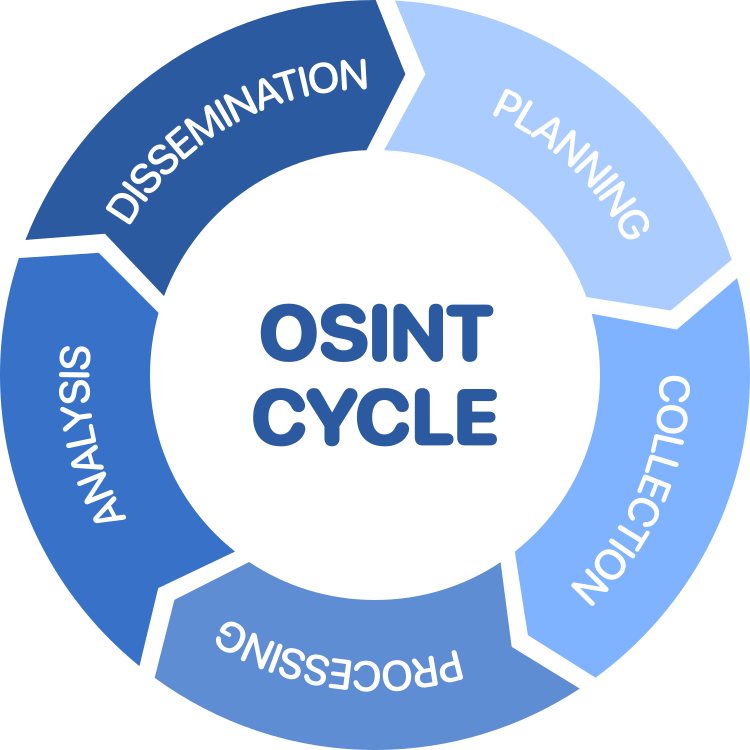

The OSINT Cycle

Within the OSINT methodology, we use the so-called 'OSINT Cycle'. These are the steps followed during an investigation, running from the planning stage to dissemination, or reporting. Afterwards, the outcome can be used as input for a new round if needed.

Let's first look at each step individually and explain what happens within it.

Planning

In the planning stage we prepare our research question, but also the requirements, objectives and goals. This is the moment we create a list of possible sources, the tools that can help us collect data from them, and what we expect or hope to find.

Collection

In this stage we collect the actual raw and unfiltered data from open sources. This can be from social media, public records, newspapers, and anything else that is accessible both online and offline. Both manual work and automated tools might be used to collect the data needed.

Processing

The raw data is processed, and its reliability and authenticity are checked. Preferably we use multiple sources to verify what is collected, and we try to minimise the number of false positives during this stage. The information is then stored in an easy-to-read format, ready for further use during the investigation.

Analysis

The information is examined to find new, meaningful insights or patterns within all the collected data. During the analysis stage we might identify fake data, remaining false positives, trends or outliers, and we might use tools to help analyse the information or visualise it.

Dissemination

In the last stage we publish the meaningful information that was uncovered: the so-called 'intelligence' part of it all. This new information can be fed back into the cycle, or we publish a report of the findings, explaining where and how we uncovered the information.
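The order of the stages, including the optional feedback loop from dissemination back to planning, can be sketched as a tiny state machine. This is only an illustration of the sequence described above; the names `Stage` and `next_stage` are hypothetical and do not come from any tool or formal model.

```python
from enum import Enum


class Stage(Enum):
    """The five stages of the OSINT cycle, in order."""
    PLANNING = 1
    COLLECTION = 2
    PROCESSING = 3
    ANALYSIS = 4
    DISSEMINATION = 5


def next_stage(stage, start_new_round=False):
    """Return the stage that follows `stage`.

    After dissemination the cycle either ends (None) or, when the findings
    are fed back in, starts a new round at planning.
    """
    if stage is Stage.DISSEMINATION:
        return Stage.PLANNING if start_new_round else None
    return Stage(stage.value + 1)


if __name__ == "__main__":
    # Walk through a single round of the cycle.
    stage = Stage.PLANNING
    while stage is not None:
        print(stage.name)
        stage = next_stage(stage)
```

The point of the sketch is that every transition is explicit: at each stage the investigator knows where the cycle is and what comes next.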

During every step of the OSINT cycle, we as investigators are in charge, picking the sources that might yield the best results. Besides that, we are fully aware of where and how the data is collected, so we can use that knowledge while processing it. This helps us spot possible false positives, and since we know the sources used, we are able to describe their reliability and authenticity. When using automated analysis, we can pick and choose the algorithms we want to use, and when visualising the results, we are the ones using the tools to do so. When we finally report our findings, we describe what information was found, and we have a duty to include any information that can be used to prove or disprove what we set out to investigate in the initial stage.

Quality of Information

To produce actionable intelligence, one needs to make sure that the data, or information, comes from a reliable and trusted source. When a new source of information is uncovered, there should be a moment of reflection to determine whether the source is not only reliable, but also authentic. When there is any reason to doubt the validity of the information, this should be taken into account. The investigator might then choose to treat the information as 'intel-only', which means it cannot be used as evidence itself, but can be used as a starting point to uncover new leads. And sometimes it is even possible to verify the information in a different way, giving it more weight.

Within the field of data science and analytics, it is important that datasets meet the criteria for accuracy, completeness, validity, consistency, uniqueness, timeliness and fitness for purpose. I would like to go over a few of them, since they are also relevant to my story.

Accuracy

Data has to be accurate, and there should be a way to validate this by comparing it to a source.

Completeness

Besides being accurate, the data has to be complete. When certain values are missing, this may lead to a misinterpretation of the data.

Uniqueness

Within the datasets you are working with, duplicate values should be kept to a minimum, or be avoided if possible.
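To make two of these criteria a bit more concrete, here is a minimal Python sketch that flags incomplete records and duplicate identifiers in a small set of collected records. The field names (`email`, `name`, `source`) and the sample records are hypothetical and only serve as an illustration. Accuracy is left out on purpose, because checking it requires comparing the data against an external reference source.

```python
from collections import Counter

# Hypothetical required fields; adjust to whatever your own dataset uses.
REQUIRED_FIELDS = ("email", "name", "source")


def incomplete_records(records):
    """Return the indices of records that lack a required field or leave it empty."""
    return [
        i
        for i, record in enumerate(records)
        if any(not record.get(field) for field in REQUIRED_FIELDS)
    ]


def duplicate_keys(records, key="email"):
    """Return the values of `key` that occur in more than one record."""
    counts = Counter(record[key] for record in records if record.get(key))
    return sorted(value for value, count in counts.items() if count > 1)


if __name__ == "__main__":
    # Made-up sample records, only for demonstrating the checks.
    records = [
        {"email": "a@example.com", "name": "Alice", "source": "forum"},
        {"email": "b@example.com", "name": "", "source": "forum"},
        {"email": "a@example.com", "name": "Alice", "source": "breach"},
    ]
    print(incomplete_records(records))  # [1]
    print(duplicate_keys(records))      # ['a@example.com']
```

Checks like these only tell you that something is missing or duplicated; deciding what to do about it remains the investigator's job.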

Automated Tools

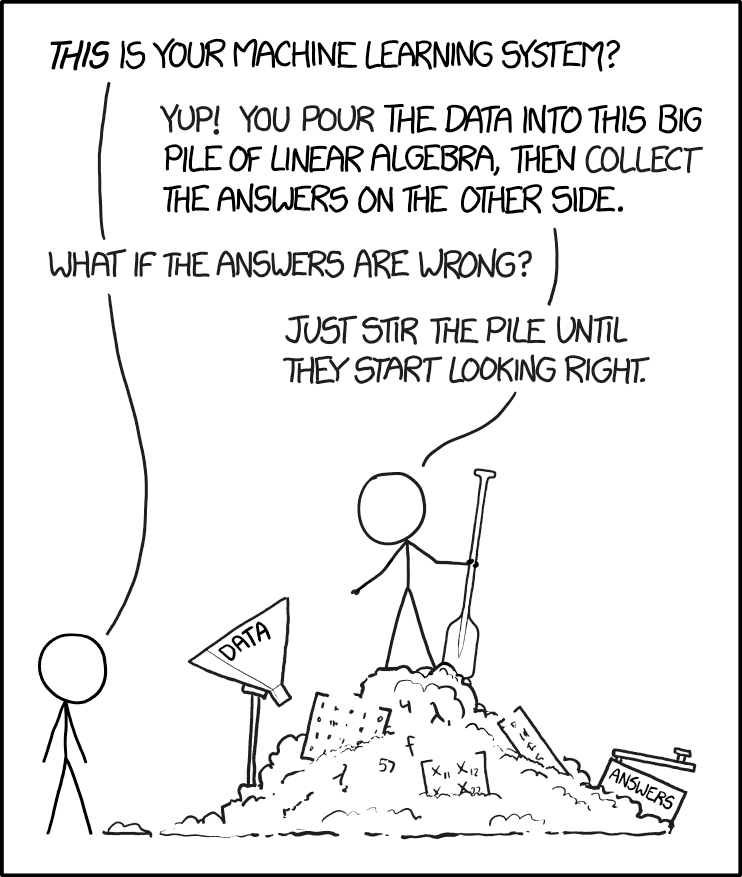

Now that I have covered some of the basics, I would like to get to the point of this article, because in my personal opinion there is a worrying development within the world of intelligence: something I like to call 'black box' intelligence products. It can be a locally installed tool, but usually it is a web-based platform that you feed snippets of information. In return, it gives you a list of seemingly related data points. Or as I like to describe it to people:

And this is where I start to have some issues. Okay, I have to admit it can be awesome, because within seconds you receive all the information you might need to propel your investigation forward. But... the intelligence cycle we are all familiar with, which forms the basis of the field of intelligence, becomes invisible. Data is collected, but we usually don't know how, and sometimes even the source is unknown. After that it is processed, without us knowing in what way or how its integrity is maintained. Some platforms even perform all sorts of analysis on the collected data and create an 'intelligence report' for you to use in your own intelligence cycle. But it will forever be unknown whether all sources and data points are mentioned, including the ones that point in a different direction. Refuting or disproving something is just as important as providing evidence that supports a specific line of investigation.

Now please think back to the previous part, where I explained a little about the basics of data science. I see several issues with these types of products or online platforms, so let's revisit a few important terms.

Accuracy

Some tools give you basic pointers to where the information comes from, like the name of a social media platform or a data breach. But that does not always give you enough information to actually verify it yourself, because sometimes these companies use proprietary techniques to collect the data, and not always in accordance with the terms of service of the target platform. But if it is impossible to verify the accuracy of the data, how do you weigh it? And if you work in law enforcement, I would like to ask: do you include the accuracy in your report?

Completeness

Since the techniques used to collect the data are not always known, how do you know it is complete? Maybe there is more metadata available that is discarded by the platform you use, but that could be extremely important for your investigation. As with accuracy, this might pose a problem further down the road, but in this case, you might not even be aware of it.

Uniqueness

Within large collections of breach data, it is not uncommon to find multiple entities connected to a single person, usually due to inaccuracies or errors during the processing of the data. This could be mentioned under the 'Accuracy' header, but when working with a dataset that uses an email address as a unique identifier, that identifier should always be unique. It is possible that someone is using multiple aliases, but when different natural persons are linked to a single email address, future pivots based on that address might actually create problems in the long run.
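Below is a minimal sketch of how such a conflict could be surfaced before pivoting on an email address: group the records by email and report every address linked to more than one distinct name. It assumes each record has hypothetical `email` and `name` fields, and normalising case and whitespace is itself an assumption. A differing name does not prove a different natural person, since it could also be an alias, so the output is a list of addresses to review by hand, not a verdict.

```python
from collections import defaultdict


def conflicting_identifiers(records):
    """Map each email address to the distinct names linked to it,
    keeping only addresses that are linked to more than one name."""
    names_by_email = defaultdict(set)
    for record in records:
        # Assumption: treat addresses case-insensitively and ignore stray
        # whitespace, so "A@x.com" and "a@x.com " count as the same key.
        email = record["email"].strip().lower()
        names_by_email[email].add(record["name"].strip())
    return {
        email: names
        for email, names in names_by_email.items()
        if len(names) > 1
    }


if __name__ == "__main__":
    # Made-up records resembling a merged breach dataset.
    records = [
        {"email": "j.doe@example.com", "name": "John Doe"},
        {"email": "J.Doe@example.com ", "name": "Jane Roe"},
        {"email": "k.lee@example.com", "name": "Kim Lee"},
    ]
    for email, names in conflicting_identifiers(records).items():
        print(email, sorted(names))
```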

Verification

When it comes to evidence gathered via open sources, especially when it is used in cases that involve serious crime, it is important to be able to independently verify the information or intelligence that is presented. This means that the data or information used as the basis for a decision must be available to other parties, so they can conduct independent research. Something presented as a 'fact' without any context or sources should not be in any report whatsoever. Only when there is an explanation of the steps taken to reach a certain conclusion, and when the information and steps are relevant to the case, might something be used as evidence.
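One common way to help others verify collected material is to record, for every capture, where it came from, when and how it was retrieved, and a cryptographic hash of the exact content. The sketch below illustrates that idea with Python's standard library only; the `Capture` structure, its fields and the helper functions are hypothetical and not a standard format, and a real workflow would also archive the captured content itself.

```python
import hashlib
from dataclasses import dataclass
from datetime import datetime, timezone


@dataclass(frozen=True)
class Capture:
    """Hypothetical provenance record for one piece of collected content."""
    source_url: str
    retrieved_at: str  # ISO 8601 timestamp in UTC
    method: str        # how it was collected, e.g. "manual browser capture"
    sha256: str        # hash of the exact bytes that were collected


def make_capture(source_url: str, method: str, content: bytes) -> Capture:
    """Create a provenance record for `content` at the moment of collection."""
    return Capture(
        source_url=source_url,
        retrieved_at=datetime.now(timezone.utc).isoformat(),
        method=method,
        sha256=hashlib.sha256(content).hexdigest(),
    )


def verify_capture(capture: Capture, content: bytes) -> bool:
    """Check that content handed over later is byte-for-byte what was recorded."""
    return hashlib.sha256(content).hexdigest() == capture.sha256


if __name__ == "__main__":
    page = b"<html>example profile page</html>"
    capture = make_capture("https://example.com/profile", "manual browser capture", page)
    print(capture)
    print(verify_capture(capture, page))         # True
    print(verify_capture(capture, page + b" "))  # False
```

Note that a matching hash only shows that the content was not altered after collection; it says nothing about whether the source itself is reliable or authentic.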

If you are tech-savvy enough to read source code, you can download and use a plethora of tools from GitHub to gather information from open sources. By reading the source code, you can understand the techniques used to retrieve certain data, making it possible to reproduce the steps manually and achieve the same result. But with proprietary tools and systems that do not share any information on how they work, it becomes difficult or even impossible to verify certain findings, which makes it hard to give weight to the information presented.

Final Thoughts

Tools can be extremely useful when we are collecting data, especially since the amount of information about an online entity can be overwhelming. But I have noticed that automated platforms do not always provide all the information I need to reproduce the steps and gather it manually. There are currently even platforms that do everything behind the scenes and provide a complete intelligence report at the end. In other words, these platforms already hold a vast amount of data, might perform live queries, analyse, filter and process the results, and present them in a report. What is shown in the end is the result of all the steps we normally perform by hand.

This means that we have to fully trust the platform or company to use the correct data, and to process and analyse it in a meaningful and correct way, before we can use it. The difficult part is that there isn't always a way to independently verify the output of these tools, since not all platforms share the techniques they use to retrieve certain information. And I am not so much talking about tools that provide a list of websites where an alias or an email address is used, because most of the time that information is rather easy to verify manually.

When you are considering a tool that uses techniques you are not familiar with, or that claims to have sources you don't have access to, it won't hurt to do some research yourself, or simply ask the vendor. Try to gain more knowledge about the tools and how they work. That way you are better able to determine whether their way of working suits your organisation. It might even be possible to demand certain changes, to make sure the product suits your needs or workflow. And while you are considering these tools, be aware that you are feeding information into them as well. If your organisation investigates certain adversaries, or might be of interest to certain governments, don't forget to take that into consideration in your decision-making process.

There are multiple 'magic black boxes', available online or for local installation, that give you all sorts of information about any given entity. I have heard people refer to it as 'push-button OSINT', which describes this development rather nicely. These platforms can be extremely useful when you are a seasoned investigator who knows how to verify all types of information via other means. But if you are a beginner, or don't have this knowledge yet, and use such platforms as the basis for your investigation, be aware that one day someone might show up and ask you how you found the information. How would you feel if the only explanation you could give is:

I would like to thank several people who helped me with this article by giving me constructive feedback and making sure I didn't forget anything worth mentioning. They are, in alphabetical order: